Today at Build 2022, Microsoft unveiled Project Volterra, a device powered by Qualcomm’s Snapdragon platform that’s designed to let developers explore “AI scenarios” via Qualcomm’s new Snapdragon Neural Processing Engine (SNPE) for Windows toolkit. The hardware arrives alongside support in Windows for neural processing units (NPUs), or dedicated chips tailored for AI- and machine learning-specific workloads.

Dedicated AI chips, which speed up AI processing while reducing the impact on battery, have become common in mobile devices like smartphones. But as apps like AI-powered image upscalers come into wider use, manufacturers have been adding such chips to their laptop lineups. M1 Macs feature Apple’s Neural Engine, for instance, and Microsoft’s Surface Pro X has the SQ1 (which was co-developed with Qualcomm). Intel at one point signaled it would offer an AI chip solution for Windows PCs, but — as the ecosystem of AI-powered Arm apps is well-established, thanks to iOS and Android — Project Volterra appears to be an attempt to tap it rather than reinvent the wheel.

It’s not the first time Microsoft has partnered with Qualcomm to launch AI developer hardware. In 2018, the companies jointly announced the Vision Intelligence Platform, which featured “fully integrated” support for computer vision algorithms running via Microsoft’s Azure ML and Azure IoT Edge services. Project Volterra offers evidence that, four years later, Microsoft and Qualcomm remain bedfellows in this arena, even after the reported expiration of Qualcomm’s exclusivity deal for Windows on Arm licenses.

“We believe in an open hardware ecosystem for Windows giving [developers] more flexibility and more options as well as the ability to support a wide range of scenarios,” Panos Panay, the chief product officer of Windows and devices at Microsoft, said in a blog post. “As such, we are always evolving the platform to support new and emerging hardware platforms and technologies.”

Microsoft’s Project Volterra hardware, which seeks to foster AI app development with Windows on Arm.

Microsoft says (somewhat hyperbolically) that Project Volterra, which arrives later this year, will come with a neural processor that has “best-in-class” AI computing capacity and efficiency. The primary chip will be Arm-based, supplied by Qualcomm, and will enable developers to build and test Arm-native apps alongside tools including Visual Studio, VSCode, Microsoft Office, and Teams.

As it turns out, Project Volterra is the harbinger of an “end-to-end” developer toolchain for Arm-native apps from Microsoft, which will span the full Visual Studio 2022, VSCode, Visual C++, .NET 6, Windows Terminal, Java, Windows Subsystem for Linux, and Windows Subsystem for Android (for running Android apps). Previews for each component will begin rolling out in the next few weeks, with additional open source projects that natively target Arm to come, including Python, node, git, LLVM, and more.

As for the SNPE for Windows, Panay said that it’ll allow developers to execute, debug, and analyze the performance of deep neural networks on Windows devices with Snapdragon hardware as well as integrate the networks into apps and other code. Complementing the SNPE is the newly released Qualcomm Neural Processing SDK for Windows, which provides tools for converting and executing AI models on Snapdragon-based Windows devices in addition to APIs for targeting distinct processor cores with different power and performance profiles.

The new tooling from Qualcomm benefits devices beyond Project Volterra, particularly laptops built on Qualcomm’s recently debuted Snapdragon 8cx Gen 3 system-on-chip. Engineered to compete against Apple’s Arm-based silicon, the Snapdragon 8cx Gen 3 boasts an AI accelerator, the Hexagon processor, that can be used to apply AI processing to photos and video.

“The Qualcomm Neural Processing SDK for Windows, combined with Project Volterra when available, will drive improved and new Windows experiences leveraging the powerful and efficient performance of the Qualcomm AI Engine, part of the Snapdragon compute platform,” a Qualcomm spokesperson told TechCrunch via email. “Qualcomm AI Engine” refers to the combined AI processing capabilities of CPU, GPU and digital signal processor components (e.g., Hexagon) in top-of-the-line Snapdragon system-on-chips.

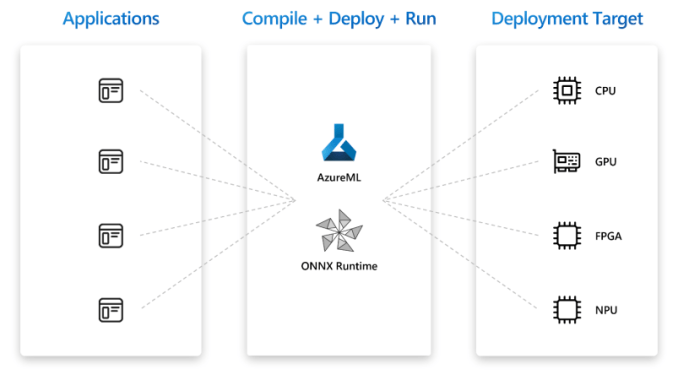

There’s a cloud component to Project Volterra, too, called hybrid loop. Microsoft describes it as a “cross-platform development pattern” for building AI apps that span the cloud and edge, with the idea being that developers can make runtime decisions about whether to run AI apps on Azure or a local client. Hybrid loop also confers the ability to dynamically shift the load between the client and the cloud, should developers need the additional flexibility.
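To make the idea of a runtime decision concrete, here is a minimal Python sketch of what such routing could look like. It is not Microsoft's implementation: the `DeviceState` fields, the `choose_target` and `run_hybrid` helpers, and the 500 MB size threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class DeviceState:
    """Hypothetical snapshot of the client; not part of any Microsoft API."""
    has_npu: bool      # assumed: a local NPU is present
    network_ok: bool   # assumed: the cloud endpoint is reachable


def choose_target(state: DeviceState, model_size_mb: float,
                  local_limit_mb: float = 500.0) -> str:
    """Return "local" or "cloud" for one inference request."""
    if not state.network_ok:
        return "local"  # no connectivity, so the client is the only option
    if state.has_npu and model_size_mb <= local_limit_mb:
        return "local"  # small enough to run on the local NPU
    return "cloud"


def run_hybrid(state: DeviceState, model_size_mb: float,
               run_local: Callable[[Any], Any],
               run_cloud: Callable[[Any], Any],
               payload: Any) -> Tuple[str, Any]:
    """Run the request on the chosen target, shifting to local if the cloud call fails."""
    if choose_target(state, model_size_mb) == "local":
        return "local", run_local(payload)
    try:
        return "cloud", run_cloud(payload)
    except ConnectionError:
        # Shift the load back to the client when the cloud is unreachable.
        return "local", run_local(payload)
```

In this sketch the caller supplies `run_local` and `run_cloud` as plain callables, so the routing logic stays independent of whichever inference runtime actually executes the model.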

Image Credits: Microsoft

Panay says that hybrid loop will be exposed through a prototype “AI toolchain” in Azure Machine Learning and a new Azure Execution Provider in the ONNX Runtime, the open source project aimed at accelerating AI across frameworks, operating systems, and hardware.
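ONNX Runtime selects hardware backends through an ordered list of execution providers, so one plausible way to express a hybrid preference is to order that list at startup. The helper below is a sketch under assumptions: `order_providers` is a hypothetical function, `"NpuExecutionProvider"` is a placeholder name rather than a real provider, and `"AzureExecutionProvider"` is assumed to be the identifier for the Azure provider the article mentions.

```python
from typing import List, Sequence


def order_providers(available: Sequence[str],
                    preferred: Sequence[str],
                    fallback: str = "CPUExecutionProvider") -> List[str]:
    """Keep the preferred providers that are actually available, in preference
    order, and append the CPU fallback last if it is available."""
    chosen = [p for p in preferred if p in available]
    if fallback in available and fallback not in chosen:
        chosen.append(fallback)
    return chosen


# Illustrative usage with ONNX Runtime (requires the onnxruntime package):
#
#   import onnxruntime as ort
#   providers = order_providers(
#       ort.get_available_providers(),
#       ["NpuExecutionProvider", "AzureExecutionProvider"],  # assumed names
#   )
#   session = ort.InferenceSession("model.onnx", providers=providers)
```

Filtering against the providers reported as available keeps the same code usable on machines without an NPU or without the Azure provider installed.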

“Increasingly, magical experiences powered by AI will require enormous levels of processing power beyond the capabilities of traditional CPU and GPU alone. But new silicon like NPUs will add expanded capacity for key AI workloads,” Panay said. “Our emerging model of hybrid compute and AI, along with NPU enabled devices creates a new platform for [developers] to build high ambition apps leveraging incredible amounts of power … With native Arm64 Visual Studio, .NET support, and Project Volterra coming later this year, we are releasing new tools to help [developers] take the first step on this journey.”